Sometimes you need a Spark notebook that's available immediately, so you can run small experiments and clear up your doubts quickly. This is the quickest way I know of to get a PySpark notebook up and running within seconds (once the image is downloaded, and without headaches).

You'll only need Docker, plus around 4.5 GB of download bandwidth to pull the Docker image.

Command

If you're on Linux:

```shell
# create a directory to mount into the container
mkdir -p ~/docker-mounts/pyspark-notebook

# start the pyspark notebook container, and sync its home directory to the mount directory
docker run -it --rm -p 8888:8888 \
  -v ${HOME}/docker-mounts/pyspark-notebook:/home/jovyan \
  --user root -e CHOWN_HOME=yes -e CHOWN_HOME_OPTS='-R' \
  jupyter/pyspark-notebook \
  start.sh jupyter notebook --NotebookApp.token=''
```

The first run pulls a Docker image that takes up 4.91 GB of disk space.

If you're on Windows, I don't know how to get this command working (I don't use Windows).

Specifically, I don't know how to set:

- `-v`: the Docker mounts
- `--user`: used to fix the permissions of written files

If you get the command working, email me and I'll put it up here.

Accessing the notebooks

You can open the notebook server on:

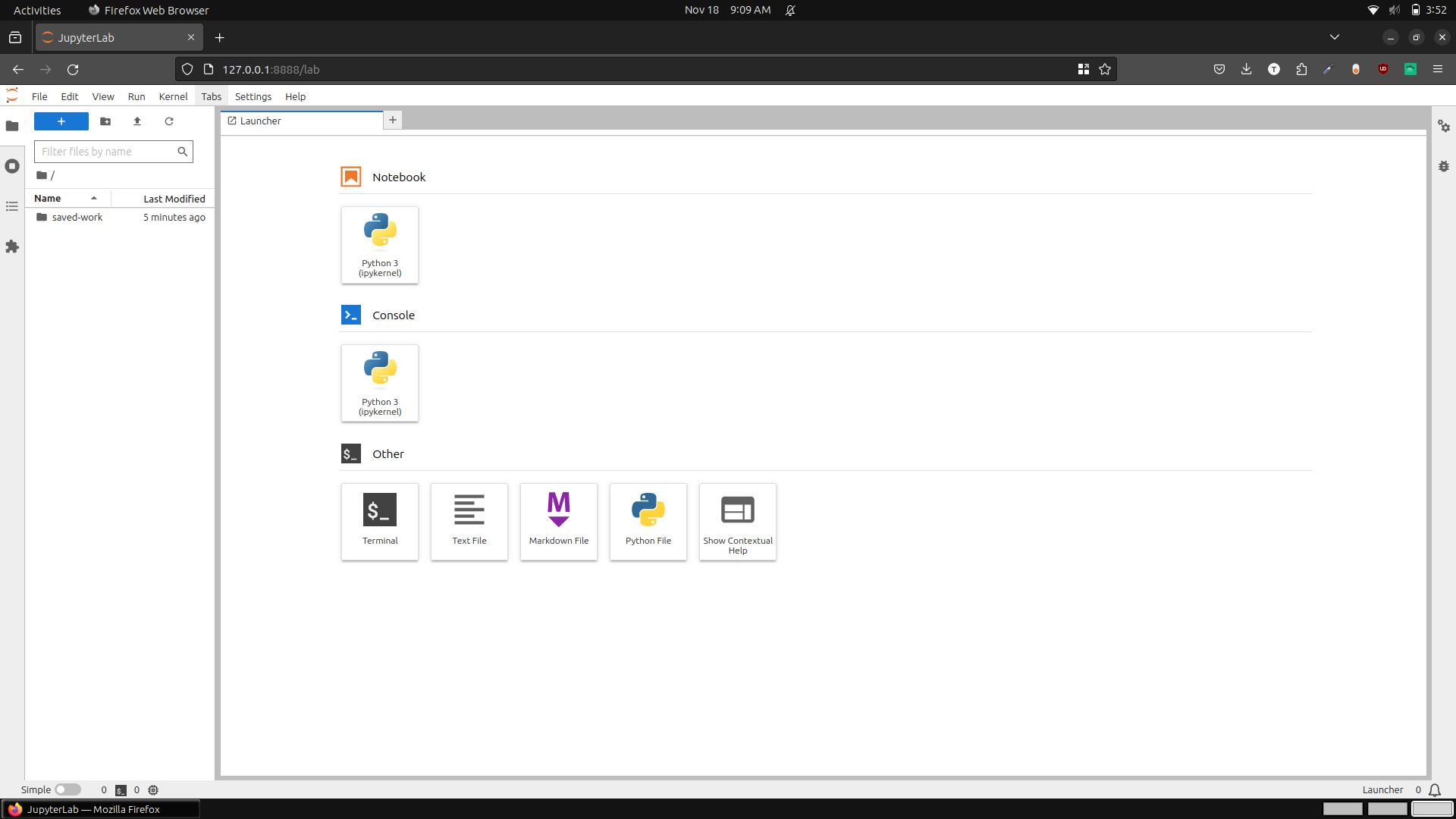

- http://127.0.0.1:8888/lab - Jupyter Lab server. Easier to upload files etc.

- http://127.0.0.1:8888/tree - Jupyter Notebook server

Notes:

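- Everything in the container's home directory (`/home/jovyan`) is saved to `~/docker-mounts/pyspark-notebook` on your machine, so it survives after the container is removed.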

- I've disabled authentication with the `--NotebookApp.token=''` flag.

- It runs Spark in local mode (driver and executors in a single JVM inside the container, no cluster). That's good enough for small experiments.

That's it. Enjoy.